Autonomous, LLM-driven agents are a $4.6 trillion market opportunity, according to Foundation Capital. To illustrate their point about AI replacing or augmenting human work, I wrote this article to explain the autonomous "Foundation Agent" as originally conceived by NVIDIA AI researcher Jim Fan.

Jim and his team used GPT-4, the large language model behind ChatGPT, to play the video game Minecraft really well. As Noah Kravitz of the NVIDIA AI Podcast said, Jim’s work had “implications for how LLMs might be used going forward to automate and advance all kinds of tasks and processes for industries and applications in the real world.” Jim's Foundation Agent concept outlines a framework for developing autonomous agents through large-scale training across various realities.

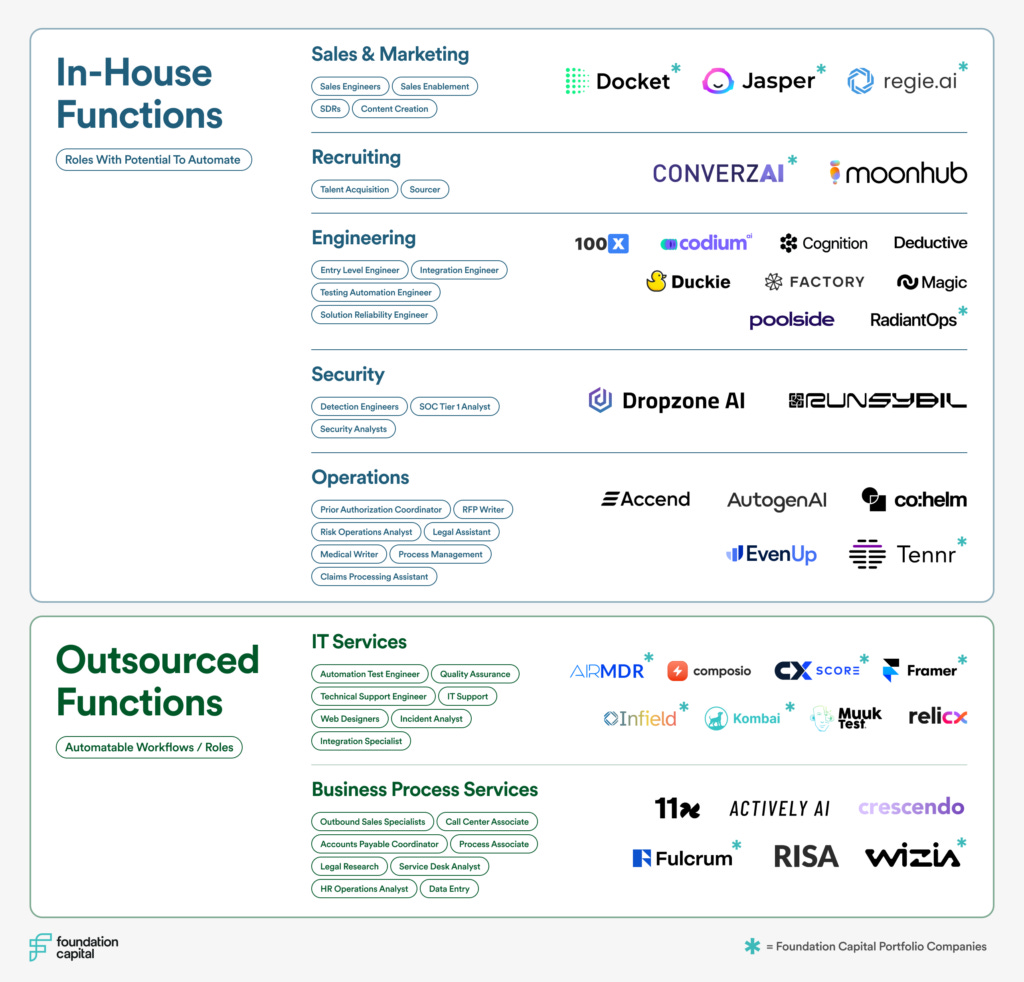

My business inspiration for this note was today’s article by Foundation Capital, “AI leads a service-as-software paradigm shift.” The article presents a market map for what the authors call a “Service-as-Software” shift. The map shows the functional roles at companies that the authors predict will be automated, along with the startups using AI to build these foundation agents to power the shift.

To learn about Foundation Agents, consider the following:

Read the section below on what a Foundation Agent is, i.e. a model that learns how to act in different environments, or "realities". An LLM scales across large amounts of text. A Foundation Agent, says author Jim Fan, "scales across lots and lots of realities. If it is able to master 10,000 diverse simulated realities, it may well generalize to our physical world, which is simply the 10,001st reality."

One tool for training Foundation Agents is NVIDIA's Isaac Gym, an open-source, modular and extensible deep reinforcement learning (RL) framework for training agents in simulated environments. At present it is a limited, stand-alone system designed expressly for batched RL simulation on NVIDIA GPUs. It exposes a set of APIs that let your code work with the underlying physics simulation through PyTorch tensors. It is built on top of NVIDIA's Isaac robotics engine, which provides high-performance physics simulation capabilities. Isaac Gym's goal is to make it easier for researchers and developers to experiment with RL algorithms in complex, realistic environments without the need for physical robots. It offers features such as multi-GPU support, a flexible API for creating custom environments, integration with popular RL libraries like OpenAI's Gym, and tools for visualizing and analyzing training results.

To solve challenges in enterprise productivity, you can create foundation agents by starting with an RL framework like Isaac Gym, adding workflow software, building integrations to enterprise systems such as ERP, recruiting, customer service, and knowledge-base systems, and finally adding LLMs to generate communications in text, images, and video.
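To make the idea of "batch simulation" concrete, here is a minimal sketch in plain Python. It is not Isaac Gym's API: the class `BatchedPointMassSim`, its `step` method, and the point-mass physics are all hypothetical stand-ins chosen for illustration. The point is only the shape of the interface, where one call advances many independent environments at once. In a GPU framework the per-environment state would live in tensors rather than Python lists.

```python
from typing import List


class BatchedPointMassSim:
    """Hypothetical toy simulator: N independent 1-D point masses of unit mass."""

    def __init__(self, num_envs: int, dt: float = 0.1) -> None:
        self.dt = dt
        # One entry per environment; a GPU framework would use tensors here.
        self.pos = [0.0] * num_envs
        self.vel = [0.0] * num_envs

    def step(self, forces: List[float]) -> List[float]:
        """Advance every environment by one time step and return all positions."""
        if len(forces) != len(self.pos):
            raise ValueError("expected one force per environment")
        # Semi-implicit Euler: update velocity first, then position.
        self.vel = [v + f * self.dt for v, f in zip(self.vel, forces)]
        self.pos = [p + v * self.dt for p, v in zip(self.pos, self.vel)]
        return list(self.pos)


# Four environments stepped in lockstep, each with a different constant force.
sim = BatchedPointMassSim(num_envs=4)
for _ in range(10):
    observations = sim.step([0.0, 1.0, -1.0, 2.0])
```

After the loop, `observations` holds one position per environment, so an RL algorithm can collect experience from all of them in a single pass.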

Foundation Agents

Note: I augmented my knowledge of AI agents by using Google Cloud’s Vertex AI.

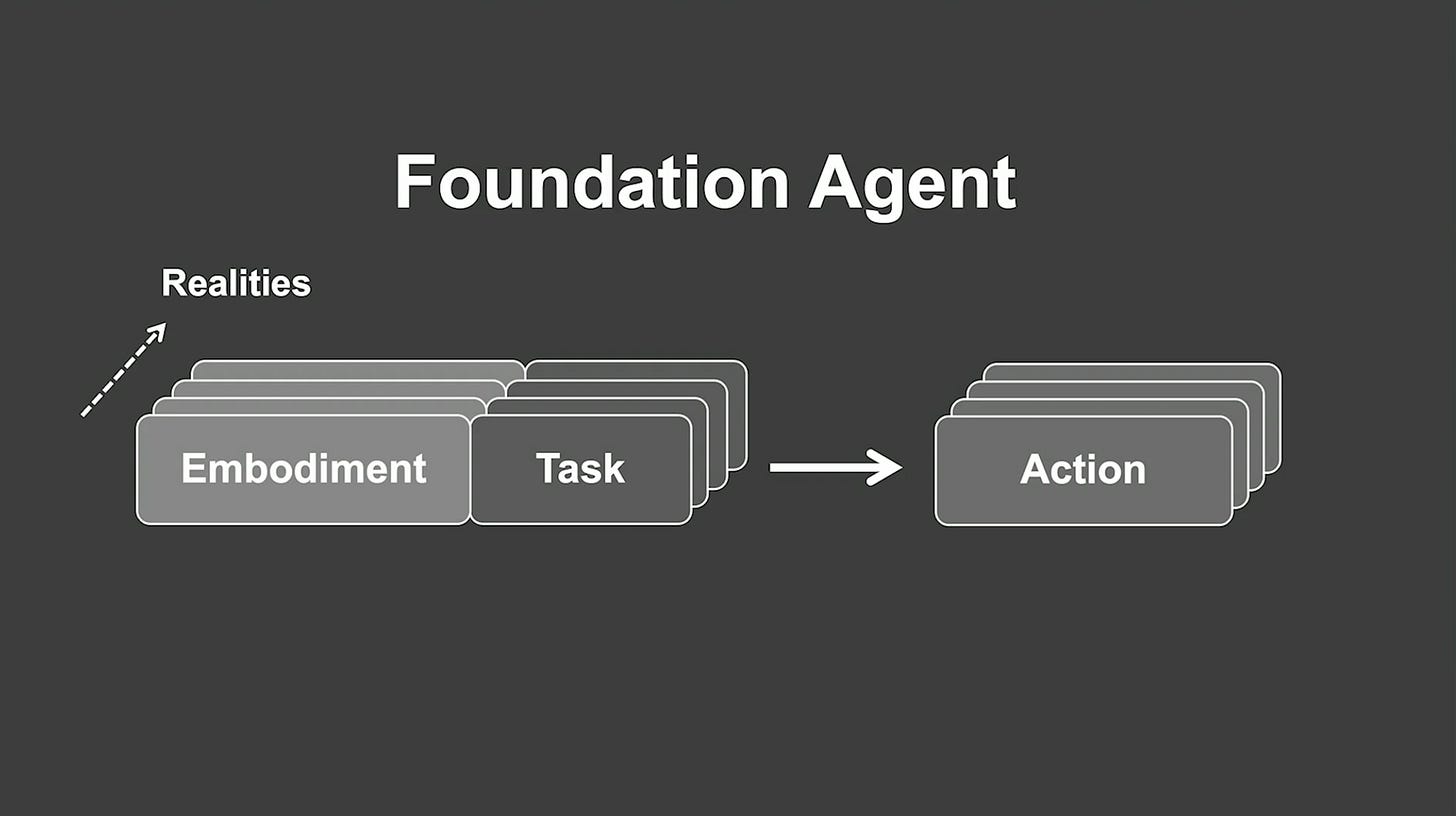

The image above was created by Jim Fan. A Foundation Agent takes as input an "embodiment" and a "task prompt" and outputs "actions". The Foundation Agent then trains by scaling up massively across lots and lots of “realities”, and it serves as the foundation for autonomous agents.

Key Components

Foundation Agent: This central entity acts as the core learning system. It receives input in the form of "Embodiment" and "Task" and produces "Actions" as output.

Embodiment: This refers to the physical or virtual form the agent takes within a specific reality. It could be a robot, a virtual avatar, or any other entity capable of interacting with the environment.

Task: This defines the goal or objective the agent needs to achieve within the given reality. It could range from simple tasks like navigating a maze to complex ones like building a house.

Action: Based on its embodiment and the assigned task, the agent generates a series of actions to interact with the environment and accomplish the goal.

Realities: The agent is not limited to a single environment. It scales itself across numerous realities, each potentially having different physical laws, settings, and challenges. This allows the agent to learn and adapt to a wide range of situations.
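The components above can be read as an input/output contract. The sketch below is one hypothetical way to write that contract down in Python. The names `Embodiment`, `Task`, `FoundationAgent`, and `KeywordAgent` are my own illustrations, not taken from Jim Fan's work, and the keyword-matching policy is a placeholder for what would be a learned model.

```python
from dataclasses import dataclass
from typing import List, Protocol, Tuple


@dataclass(frozen=True)
class Embodiment:
    """The form the agent takes, described here only by what it can do."""
    name: str
    available_actions: Tuple[str, ...]


@dataclass(frozen=True)
class Task:
    """The goal, given as a natural-language prompt."""
    prompt: str


class FoundationAgent(Protocol):
    """Contract: (embodiment, task) in, a list of actions out."""
    def act(self, embodiment: Embodiment, task: Task) -> List[str]: ...


class KeywordAgent:
    """Placeholder policy: choose the actions whose names appear in the prompt."""

    def act(self, embodiment: Embodiment, task: Task) -> List[str]:
        words = task.prompt.lower().split()
        chosen = [a for a in embodiment.available_actions if a in words]
        # Fall back to the first available action if nothing matches.
        return chosen or [embodiment.available_actions[0]]


# The same agent drives two different embodiments.
agent: FoundationAgent = KeywordAgent()
arm = Embodiment("robot_arm", ("grasp", "release"))
avatar = Embodiment("game_avatar", ("walk", "jump"))

arm_actions = agent.act(arm, Task("grasp the cup"))
avatar_actions = agent.act(avatar, Task("jump over the wall"))
```

The design choice worth noting is that the embodiment is an argument, not a property of the agent, which is what lets a single agent be reused across many bodies and realities.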

Training Process

Deployment: The Foundation Agent is deployed into multiple realities with various embodiments and assigned tasks.

Interaction: Within each reality, the agent performs actions based on its understanding of the embodiment and the task.

Feedback and Learning: The agent receives feedback on its actions, potentially in the form of rewards or penalties based on its success in achieving the task. This feedback is used to update its internal model and improve its decision-making for future scenarios.

Scaling: The process repeats across numerous realities, allowing the agent to accumulate vast experience and learn generalizable skills applicable to different situations.
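The four steps above can be sketched as a small training loop. This is a toy under stated assumptions, not the method used by Jim Fan's team: each `Reality` is a hypothetical 1-D world, the agent observes only the direction of the goal, and learning is a simple running average of rewards per (observation, action) pair. All class and function names are illustrative.

```python
import random
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Reality:
    """Toy 1-D world: the agent starts at 0 and must reach `goal`."""
    size: int  # positions are clipped to the range [-size, size]
    goal: int  # nonzero target position inside that range

    def observe(self, pos: int) -> int:
        # The agent sees only the direction of the goal: +1 or -1.
        return 1 if self.goal > pos else -1

    def step(self, pos: int, action: int) -> Tuple[int, float, bool]:
        new_pos = max(-self.size, min(self.size, pos + action))
        # Feedback: +1 for getting closer to the goal, -1 otherwise.
        closer = abs(self.goal - new_pos) < abs(self.goal - pos)
        return new_pos, (1.0 if closer else -1.0), new_pos == self.goal


class ToyAgent:
    """Keeps a running-average reward estimate for each (observation, action)."""

    def __init__(self, epsilon: float = 0.1, lr: float = 0.1, seed: int = 0):
        self.q = {(o, a): 0.0 for o in (-1, 1) for a in (-1, 1)}
        self.epsilon, self.lr = epsilon, lr
        self.rng = random.Random(seed)

    def act(self, obs: int, explore: bool = True) -> int:
        if explore and self.rng.random() < self.epsilon:
            return self.rng.choice((-1, 1))  # occasional random exploration
        return max((-1, 1), key=lambda a: self.q[(obs, a)])

    def learn(self, obs: int, action: int, reward: float) -> None:
        key = (obs, action)
        self.q[key] += self.lr * (reward - self.q[key])


def run_episode(agent, reality, max_steps=50, explore=True, learn=True) -> bool:
    """Interaction plus feedback inside one reality; True if the goal is reached."""
    pos = 0
    for _ in range(max_steps):
        obs = reality.observe(pos)
        action = agent.act(obs, explore)
        pos, reward, done = reality.step(pos, action)
        if learn:
            agent.learn(obs, action, reward)
        if done:
            return True
    return False


# Deployment and scaling: one agent, many realities with different sizes and goals.
training_realities = [
    Reality(size=10 + i, goal=(i + 3) * (1 if i % 2 == 0 else -1)) for i in range(8)
]
agent = ToyAgent()
for reality in training_realities:
    for _ in range(20):
        run_episode(agent, reality)

# Evaluate on a reality the agent never trained on, with no exploration or learning.
held_out = Reality(size=30, goal=-17)
solved_held_out = run_episode(agent, held_out, explore=False, learn=False)
```

The held-out reality plays the role of the "10,001st reality" in miniature: the agent solves it because what it learned (move toward the goal) does not depend on any one training world.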

Implications and Potential

This concept of a "Foundation Agent" holds significant potential for the development of highly adaptable and capable autonomous agents. By training across a diverse set of realities, the agent can learn to solve problems and complete tasks in a wide range of environments. This could lead to breakthroughs in areas such as:

Robotics: Robots that can operate effectively in complex and dynamic real-world situations.

Virtual Assistants: Highly intelligent assistants capable of understanding and responding to user needs across different contexts.

Scientific Discovery: Agents that can explore and experiment within simulated realities to accelerate scientific research and discovery.

Gaming and Entertainment: More realistic and engaging virtual worlds and characters.

Challenges and Considerations

While the concept is promising, there are significant challenges to overcome:

Computational Resources: Training agents across numerous realities requires vast computational resources and efficient algorithms.

Reality Design: Creating diverse and meaningful realities for training is crucial for the agent to learn generalizable skills.

Safety and Ethics: Ensuring the safety and ethical behavior of autonomous agents trained through this method is paramount.

Overall, the "Foundation Agent" concept offers a fascinating glimpse into the future of AI and autonomous systems. As research progresses, it will be interesting to see how this framework develops and impacts various aspects of our lives.

About Neuronn AI

Neuronn AI is a professional advisory group rooted in prominent Artificial Intelligence companies such as Meta. Leveraging extensive expertise in Data Science & Data Engineering, AI Product Management, Marketing Research, Strategic and Brand Marketing, Finance, and Program Management, we offer fractional assistance to startups looking to launch AI-driven product ideas and provide consulting services to enterprises seeking to implement AI to accelerate their outcomes. Neuronn AI's advisors include Alex Kalinin, Carlos F. Romero, Enrique Ortiz, Lacey Olsen, Norman Lee, Owen Nwanze-Obaseki, Rick Gupta, Seda Palaz Pazarbasi, Sid Palani and Vik Chaudhary.